We trust cameras to record our memories. But they need to understand what life really looks like

Ever since digital cameras and smartphones came along, we’ve been taking more pictures than we can easily manage. Yet we often still don’t have photos of the moments we most want to remember, either because we were busy enjoying them or because some of us always end up taking the photos rather than being in them. That’s the gap that lifecams, cameras that take pictures automatically, promise to fill.

The point of having a camera that takes pictures for you isn’t to replace the kind of carefully composed images where you think hard about the picture you want to take. It’s more about capturing moments of your life and leaving you free to enjoy them without thinking about pulling out the camera. If you want to document your life in detail to help you remember it in the future (perhaps when your memory isn’t as good as it once was), or if you’re always the person taking the photos and never the person getting their photo taken, the idea might seem appealing.

An always-on device snapping away without warning raises potential privacy issues, as well as etiquette questions about whether you warn visitors and give them the option not to be on candid camera. These are much like the issues with smart glasses disguised as ordinary sunglasses, which make many people feel uncomfortable. And the many photos and videos of, say, ‘cheese-and-wine’ parties that we’ve all been poring over in recent weeks underline why having photos snapped automatically whenever someone is doing something interesting isn’t always a good thing.


And what about retrieving a photo you didn’t know you had because you didn’t take it? Will all your friends (and data regulators around the world) be comfortable with not just facial recognition but the other data mining you’d need to do to be able to bring up a photo of Aunt Beryl sitting at your kitchen table on a summer afternoon some time in the past 10 years?

Do you want the camera to know about the weather outside with a feed from your smart weather station, be able to sniff which phones are connected to your home Wi-Fi or to sample the air in the kitchen (because smell is one of the most evocative senses, you might remember that you were baking cookies more easily than what year it was)? How about tracking down someone whose name you don’t remember?

The question of what makes an interesting photo is also a little fraught, because to be useful, these lifecams need software good enough to pick out the worthwhile images from the hundreds of dud snaps of people turning away, blinking or just sitting there. Imagine never being sure whether you can’t find a photo of a memorable day because you’re using the wrong search terms or because the AI didn’t detect anything worth taking a picture of.

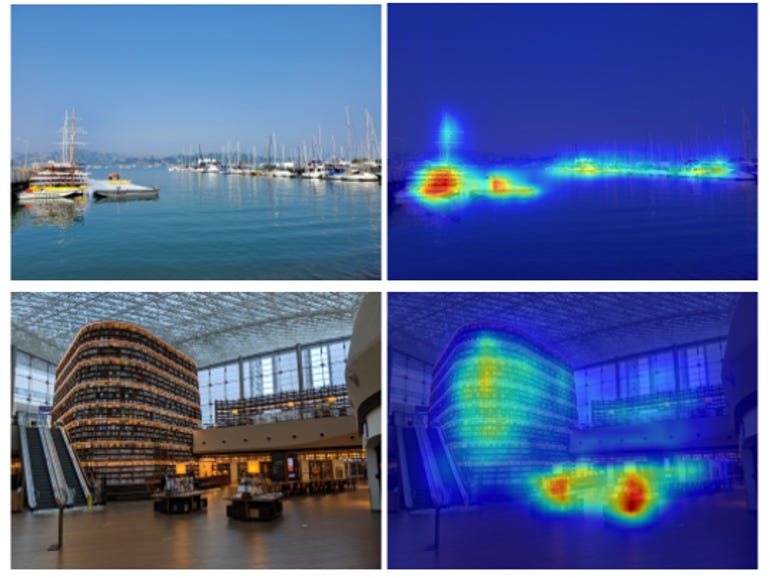

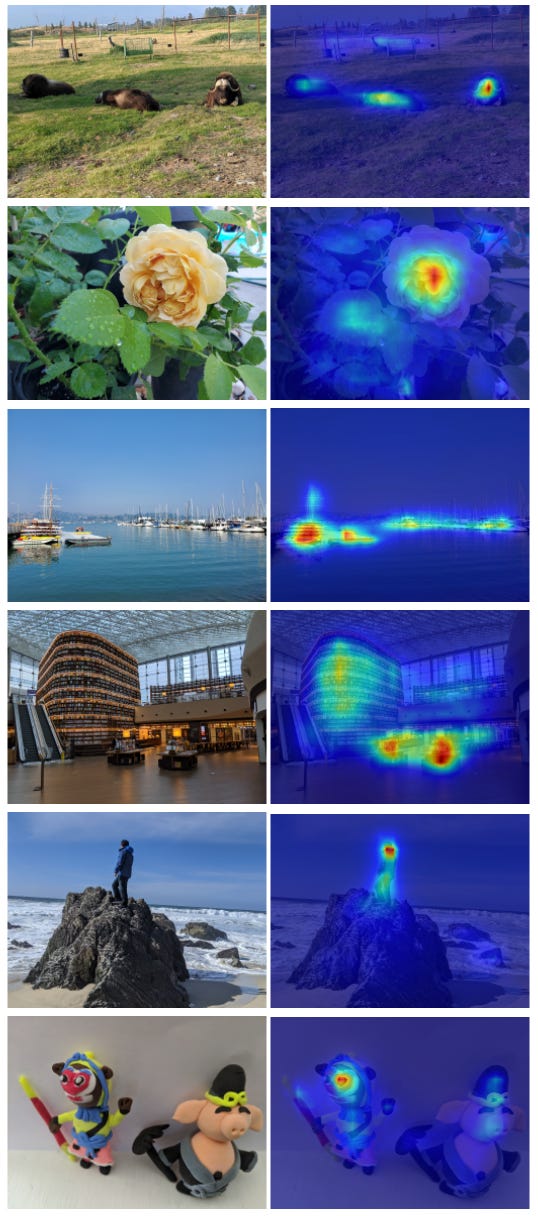

The technical term for ‘interesting’ is saliency, and saliency algorithms have biases that we’re only just starting to examine. When you look at a picture, you don’t take in the whole thing at once: your eye goes first to what your brain sees as most interesting. Google uses that as the basis of its new image format, where the part of the image that a machine-learning model predicts is most interesting downloads first: the flower rather than the leaves around it, the eyes and mouth rather than the wall behind someone’s head.

Google’s machine-learning models learn what we look at in pictures, but that gaze can have bias.

The saliency models that decide which part of an image to download first, how to crop an image automatically without losing its visual impact, or which photos to take in the first place aren’t just responding to what people look at first; they’re directing your gaze as well. As many of us have noticed from endless video meetings over the past 18 months, we tend to look at other people’s faces first, but if you see a photo of someone looking at something, you’ll look at what they’re looking at.
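To make the cropping step more concrete, here is a minimal Python sketch of one straightforward way to turn a saliency map into a crop: slide a fixed-size window over the map and keep the window whose scores add up to the most. This is an illustration under stated assumptions, not Twitter’s or Google’s actual algorithm; the function name `best_crop` and the toy saliency map are hypothetical, and in a real system the map would come from a trained model, which is exactly where learned bias gets in.

```python
import numpy as np


def best_crop(saliency: np.ndarray, crop_h: int, crop_w: int) -> tuple:
    """Return (top, left) of the crop_h x crop_w window with the highest total saliency.

    Hypothetical helper for illustration: `saliency` is assumed to be a 2D array
    of non-negative scores produced elsewhere (for example, by a trained model).
    """
    h, w = saliency.shape
    if not (0 < crop_h <= h and 0 < crop_w <= w):
        raise ValueError("crop size must fit inside the image")

    # Summed-area table padded with a leading row and column of zeros, so the
    # total inside any window can be read off with four lookups.
    sat = np.zeros((h + 1, w + 1), dtype=float)
    sat[1:, 1:] = saliency.cumsum(axis=0).cumsum(axis=1)

    # Totals for every possible window position at once:
    # window at (i, j) = sat[i+ch, j+cw] - sat[i, j+cw] - sat[i+ch, j] + sat[i, j]
    rows, cols = h - crop_h + 1, w - crop_w + 1
    totals = (
        sat[crop_h:crop_h + rows, crop_w:crop_w + cols]
        - sat[:rows, crop_w:crop_w + cols]
        - sat[crop_h:crop_h + rows, :cols]
        + sat[:rows, :cols]
    )

    # Keep the most "interesting" window; ties go to the first in row-major order.
    top, left = np.unravel_index(np.argmax(totals), totals.shape)
    return int(top), int(left)


# Toy saliency map: two "faces" that the (hypothetical) model has scored differently.
saliency = np.zeros((6, 8))
saliency[1:3, 0:2] = 0.3  # a face the model rates as less salient
saliency[1:3, 5:7] = 1.0  # a face the model rates as highly salient

print(best_crop(saliency, 3, 3))  # prints (0, 4)
```

Because the crop simply follows the scores, the face the model rates lower is cut out entirely whenever both faces can’t fit in the frame. Nothing in the cropping code itself is biased; whatever the model learned to treat as salient decides who stays in the picture.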

And like many machine-learning models trained simply by observing what people say and do, saliency models encode all the biases of those people and apply them automatically, at scale. When Twitter invited researchers to look for bias in its saliency-driven image-cropping algorithm, it found so many problems that, instead of trying to redesign the algorithm, it is simply getting rid of automatic cropping. The research that won the bug bounty showed that the algorithm treats young, thin, pretty, white, female faces as the ones that matter most. Make someone’s skin lighter and smoother, or make their face look slimmer, younger, more stereotypically feminine and generally more conventionally attractive, and the algorithm will crop the photo to highlight them. It also tends to cut out people with white hair, as well as people in wheelchairs.

That means that if we’re using smart cameras to capture photos of what matters in our lives, we need to make sure they aren’t trained to ignore grandparents, friends in wheelchairs, or anyone and anything else that doesn’t match who and what we’ve accidentally taught them to look at and value. Otherwise, we might not realise what’s missing until we look through those photos months, years or even decades later.